“An invasion of armies can be resisted, but not an idea whose time has come”

or

Do European Cloud services have a future? And the Spanish ones?

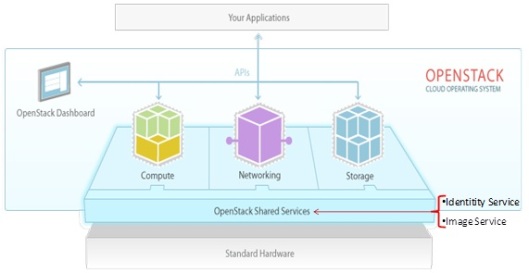

The emergence of “Cloud Computing” implies a redefinition of the way we work (in the broadest sense of the word) with ICT, both for Service Providers and for the Users of those services (whether citizens, the self-employed or professionals, employees of a company, body or entity of any kind, or the ICT departments of those companies or entities).

In its early days many people said, and some still say today (especially among ICT companies that have not “done their homework” and have yet to enter the market), that “Cloud Computing” was a buzzword. There is no doubt that this has often been true of concepts with nothing (new) behind them, and that every time a concept emerges some people seize on it and stretch it to very forced limits (such as XaaS, or “Whatever as a Service”).

However, by exploiting and combining advances in many other technologies (in many cases underlying the model, such as virtualization), Cloud Computing for the first time meets the expectations of citizens and companies to use ICT as a “Utility” (that is, like the services of electricity, gas or water companies). As Victor Hugo said, “an invasion of armies can be resisted, but not an idea whose time has come.”

That is why all the specialists agree that, although the concept of Cloud Computing will still undergo transformations and evolve, “it is not a passing fad, but is here to stay”. That evolution will come both from the industry's own innovation, creating new applications and services for the end user, and from the challenges (or obstacles and barriers, depending on how you look at it) that Cloud Computing still has to face and resolve: from security to cultural, organizational or process change, by way of the absence of standards and problems of interoperability, portability, “trust”, performance, SLAs, regulation and legislation, etc.

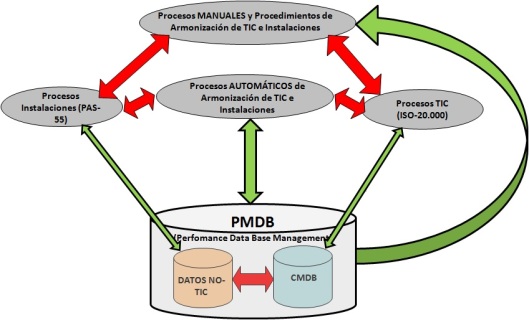

La solución a estos desafíos no está en un

producto, ni en una técnica, ni en un método, ni en un proceso concreto, sino en

una “normalización” de todas las actividades necesarias para garantizar la

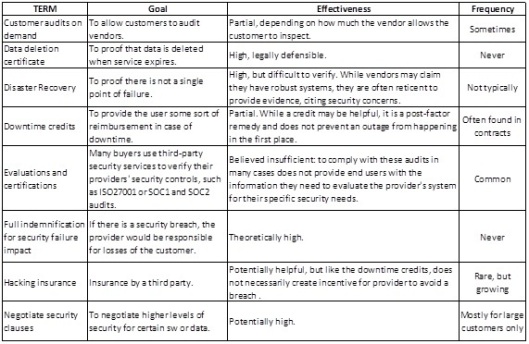

seguridad, y resolver el resto de retos pendientes. Una encuesta reciente de

ENISA (la agencia de seguridad cibernética de la UE) sobre los Acuerdos de Nivel

de Servicio (ANS) mostró que muchos funcionarios en las organizaciones del

sector público apenas reciben observaciones sobre los factores importantes de

seguridad, como la disponibilidad del servicio, o las debilidades del software.

Con el fin de ayudar a solventar este problema, ENISA ha lanzado este mismo año

2012 (en abril) una guía práctica dirigida a los equipos informáticos de

adquisición de servicios TIC, centrándose en la vigilancia continua de la

seguridad en todo el ciclo de vida de un contrato en la “Nube”: se trata de la

“EU Procure Secure: A guide to monitoring of security

service levels in cloud contracts”. La publicación de esta guía se produce

unos poco meses más tarde de que febrero la Administración Norteamericana

publicara la “Federal Risk Assessment Program” (FedRAMP), cuyo objetivo

es evaluar y asegurar el riego mediante la normalización de más de 150 “controles de seguridad” y

con los que se establecen los requisitos de seguridad comunes para la

implementación de Clouds en determinados tipos de sistemas. De esta forma los

proveedores que quieran vender sus servicios a la Administración Federal

Norteamericana deberán adherirse al programa y demostrar que cumple con dichos

controles.

Beyond their many points in common, the first difference between the two is that while the European one is a “guide”, the American one also includes a certification program for companies that want to contract with the Federal Administration, a regulation that providers have seen not as an obstacle but as an incentive for business. In my opinion, however, the main difference is that the American FedRAMP is the consequence of, and the logical next step after, a turning point: the publication at the end of 2010 of the “Cloud First Policy”, with which the Obama Administration (through the Office of Management and Budget, OMB) decided to promote the use of Cloud Computing across all federal bodies in order to reduce the cost of services, requiring US Federal Agencies to use Cloud solutions whenever these exist and are secure, reliable and cheaper.

Speaking to a magazine very recently (June 2012), the European Commissioner for the Digital Agenda (Ms. Neelie Kroes) said that Europe is not advocating a European Cloud but rather what Europe can contribute to the Cloud, and made clear that the Cloud is a concept that knows no borders, so legislation will have to reflect this without giving up the personal data protection rights of European citizens. These statements follow the also recent publication of a report by Gartner (one of the most prestigious consulting firms in the sector) which states that Europe is 2 years behind the US in Cloud matters.

While acknowledging that interest in the Cloud in Europe is very high, and that the opportunities Cloud Computing offers are valid worldwide, Gartner holds that the risks and costs of the Cloud, mainly security, transparency and integration (which apply everywhere), take on a particular character and relevance in Europe and act as brakes (or at least as “slowers”) on Cloud adoption there:

- First, the diverse (and even still changing) privacy regulations of European countries inhibit the movement of personal data in the Cloud. Although some argue that this may favor the dominance of certain companies that base their business on geolocating the Cloud within the borders of a country (or region), it is leading many other companies to avoid European Cloud Services Providers (CSPs) for fear of conflicts between European and American legislation.

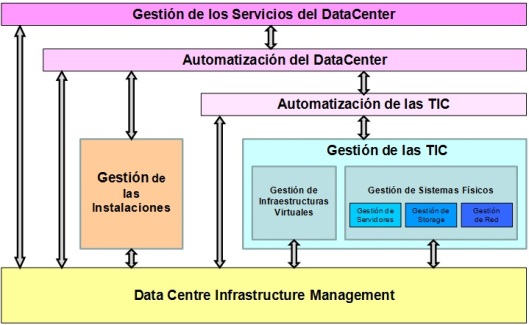

- Second, the complexity of integrating business processes (B2B) in Europe has favored some European Providers, but that same complexity makes it hard to reach critical mass and therefore slows the emergence of companies offering Cloud Services across the whole of Europe.

- Third, the slowness of pan-European political practice and legislative processes, together with the legislative variety among the different countries, hampers the business of CSPs.

- Finally, on top of these 3 factors comes the effect on investment of the debt crisis in the eurozone.

In the face of these challenges, in my opinion, for the moment we have only good intentions from the European Commission, expressed in grand words and few deeds (just a few good but timid and, in my view, insufficient steps).

Moreover, in Europe the influence of the public sector is far greater than in the US (where the private sector is, in itself, much more dynamic and agile). For that reason both the European Commission's own administration and the various Administrations of the Member States have an important, indeed essential, role in fostering Cloud Computing in Europe, both as users and consumers of Cloud services and as facilitators of business development around Cloud Computing. That is why I believe Europe needs to define a “Cloud Policy” that clearly promotes the use of the Cloud in all European Public Administrations, both the Commission's and the Member States' (along the lines of the US “Cloud First Policy”), so as to stimulate the Cloud market for service providers (CSPs) and for the companies that consume those services, as well as investment in Research and Development in this area. Commissioner Kroes said that Europe is full of people with the talent to achieve this, but what is undoubtedly needed is a market that demands it and regulation and legislation that allow it; otherwise we will once again “miss the train”.

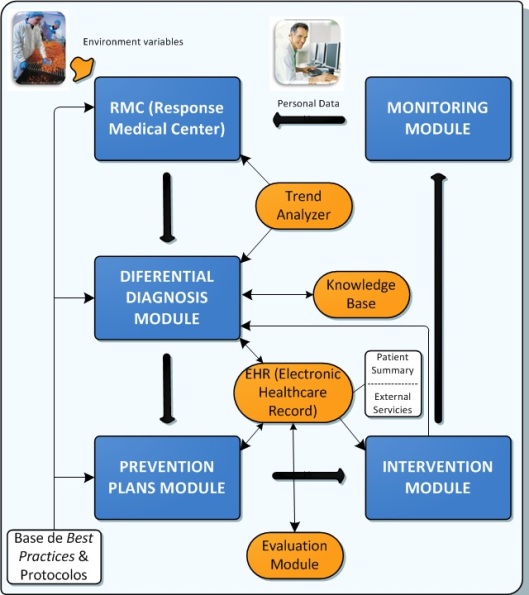

As far as our country is concerned, the “Informe de Recomendaciones para la Agenda Digital en España” (Report of Recommendations for the Digital Agenda in Spain), presented just days ago, on 18 June, and prepared by a Group of Experts commissioned by the Government, acknowledges that “Spain faces a crisis in its economy marked by the particular configuration of endemic risks (real-estate bubble, financial crisis, etc.), the existence of structural deficiencies and the imbalances with respect to other core economies of the euro zone, elements that are amplifying the negative effects of the adverse international situation”. It also states that “the intelligent adoption of digital technologies will make it possible to boost growth, innovation and productivity, helping to prevent the path of transformation and modernization that the Spanish economy has followed in recent decades from being cut short.” Among the main drivers of change it highlights first of all “the transition to cloud computing as a mechanism for the efficient delivery of services”, without forgetting others as important as “the generalization of mobility, the increase in the availability of ultra-fast broadband, the development of the Internet of Things, and the widespread use of devices such as smartphones and tablets”.

However, while in the United Kingdom the Government created the “UK CloudStore” a few months ago (a system designed to facilitate, simplify and reduce the cost of selecting and provisioning Cloud services for the public sector), in Spain we have gone more than 10 years without renewing the “Catálogo de Patrimonio” for the provision of DataCenter services. We hope that, when the corresponding tender is issued, it will include Cloud Computing Services (at least those of the IaaS type, that is, “Infrastructure as a Service”), and we also hope that its award will not take more than 2 years (because of the appeals lodged), as happened with the tender for “Desarrollo de Sistemas de Información” (Information Systems Development).

Finally, my greatest wish is that my words prove as ephemeral as, or more ephemeral than, the statistical data collected and analyzed in this report. Those data are necessary, since they reflect the state and awareness of Cloud Computing among SMEs and point to the aspects that need work in order to improve the situation, but we all hope they become outdated as soon as possible, because that would be a great sign of progress for the Spanish ICT market in particular and for the evolution of the Spanish economy in general.

Final Note: To better reflect the situation in Spain I should clarify that, since the publication of the aforementioned report/book from which I have reproduced this article, the tender for entry into the Catálogo de Patrimonio del Estado for “Servicios de alojamiento de sistemas de información” (information systems hosting services) has been called (and is still in the bidding process). Regarding Cloud Services it states, and I quote: “Bidders may indicate in their offer whether they are in a position to provide hosting services based on cloud computing should they be awarded the contract. Those conditions would carry over to the contracts based on the framework agreement that provide for that possibility. The inclusion of this type of solution is not mandatory in order to bid.”

